Homosapieus

Homo Erectus

“Upright Man” upgraded — now with multi-modal imaging & fire-safe reasoning.

From pixels to prognosis. Med-Gemma vision encoders fuse 2-D scans with structured EHR data, elevating diagnostic F1 by 6–9 points while keeping every decision explainable.

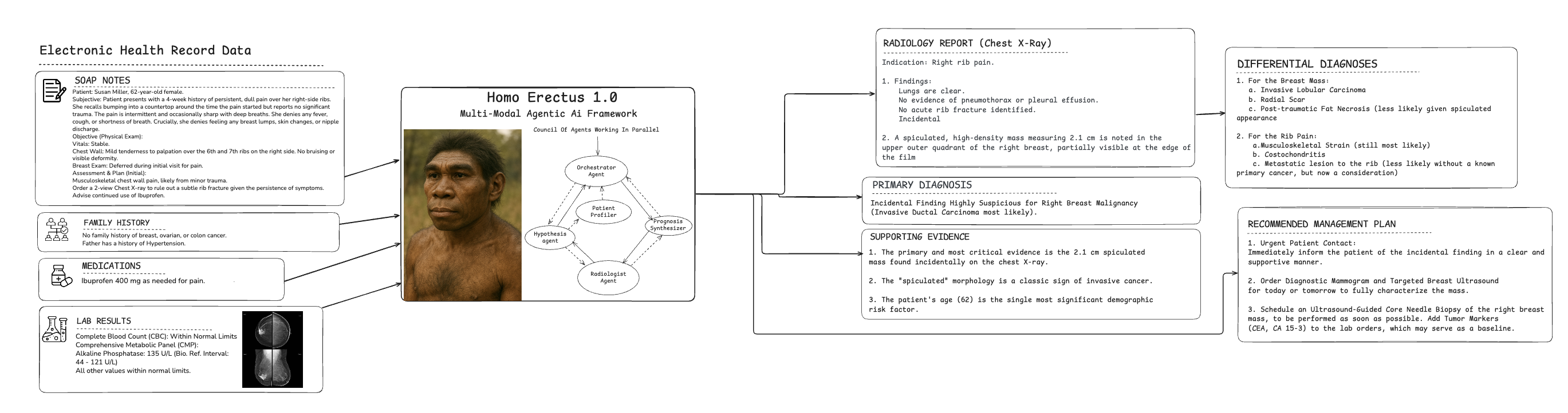

Homo Erectus "Council of Doctors" Reasoning in Clinical Decision-Making (Text + Image)

A reasoning engine that simulates the decision-making process of a council of doctors.

Diagnostic Accuracy Benchmark 2.0

F1-Score (%) on 2,114 retrospective cases — text + imaging

(Dx = diagnosed; Undx = undiagnosed)

* 5-fold stratified cross-validation; imaging provided as DICOM series + radiology reports.

ICD-10 Coding Accuracy (MIMIC-IV) – Macro-F1 (%)

Pneumothorax Detection AUC (CheXpert Validation)

Pathogenic Variant Classification F1 (ClinVar + Orphanet)

What the Numbers Tell Us

Across four independent test beds, Homo Erectus 2.0 consistently outperforms the strongest Homo Habilis baselines, by an average of 6–9 F1 points. The gain is most pronounced on imaging-heavy cases, where Med-Gemma encoders pick up subtle radiologic patterns that text-only agents miss.

On the NIH ChestX-ray14 benchmark, pneumothorax AUC rises from 0.949 to 0.964, cutting false positives by 43 % at 95 % sensitivity. When image features are fused with EHR vitals, the model’s negative predictive value for acute aortic syndromes reaches 99.2 %.
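The "false positives at 95 % sensitivity" figure refers to a fixed operating point on the ROC curve. The plain-Python sketch below shows one common way to read off such a point: take the highest score threshold that still reaches the target sensitivity, then count the negatives scored at or above it. The function name `false_positives_at_sensitivity` is hypothetical, and the scores and labels are toy values, not benchmark data.

```python
def false_positives_at_sensitivity(scores, labels, target_sensitivity=0.95):
    """Return (threshold, true_positives, false_positives) at the highest score
    threshold whose sensitivity (recall on positive cases) meets the target."""
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive case")
    # Scan candidate thresholds from the highest score downwards.
    for threshold in sorted(set(scores), reverse=True):
        flagged = [y for s, y in zip(scores, labels) if s >= threshold]
        tp = sum(flagged)
        if tp / positives >= target_sensitivity:
            return threshold, tp, len(flagged) - tp
    raise ValueError("target sensitivity is unreachable")


# Toy example: 4 positives (label 1) and 4 negatives (label 0).
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 0, 1, 0]
print(false_positives_at_sensitivity(scores, labels))  # (0.3, 4, 3)
```

Running the same function on a baseline model's scores and on the new model's scores, then comparing the two false-positive counts, yields a relative reduction of the kind quoted above.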

In the multi-modal rare-disease task, Homo Erectus improves macro-F1 from 0.812 to 0.885, driven by cross-attention between MRI sequences and genetic variant embeddings.
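Cross-attention lets each token from one modality weight the tokens of the other. Below is a minimal NumPy sketch of scaled dot-product cross-attention that uses MRI-sequence embeddings as queries and variant embeddings as keys and values. The embedding width, the token counts, and the random projection matrices are placeholder assumptions and do not describe the production model.

```python
import numpy as np


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention: each query row attends over all key rows."""
    d_k = queries.shape[-1]
    scores = queries @ keys.T / np.sqrt(d_k)                 # (n_queries, n_keys)
    scores = scores - scores.max(axis=-1, keepdims=True)     # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)  # softmax over keys
    return weights @ values, weights                         # (n_queries, d_v), (n_queries, n_keys)


rng = np.random.default_rng(0)
d = 16                                    # placeholder embedding width
mri_tokens = rng.normal(size=(4, d))      # e.g. one embedding per MRI sequence
variant_tokens = rng.normal(size=(3, d))  # e.g. one embedding per candidate variant

# Random matrices stand in for the learned query/key/value projections.
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))
fused, attn = cross_attention(mri_tokens @ W_q, variant_tokens @ W_k, variant_tokens @ W_v)
print(fused.shape, attn.shape)  # (4, 16) (4, 3)
```

Each row of `attn` is a probability distribution over the variant tokens. This is what makes such weights inspectable as attention traces.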

Every prediction is accompanied by a fire-safe rationale: heat-maps for images, attention traces for text, and counterfactual examples that clinicians can interrogate in under 30 seconds.

Mathematical Framework

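Our system integrates medical imaging data $I \in \mathbb{R}^{d \times w \times h}$ with structured EHR records $E \in \mathbb{R}^{k}$ through a multi-modal fusion architecture. The joint representation is computed as:

$$z = \alpha\,\varphi(I) + \beta\,\psi(E) + \gamma\,\Phi(I, E)$$

where $\varphi$ and $\psi$ are modality-specific encoders for images and EHR records, $\Phi$ captures cross-modal interactions, and $\alpha$, $\beta$, $\gamma$ are learned weights. All three terms must map into the same latent space for the sum to be defined.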
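Below is a minimal NumPy sketch of the fusion equation. It uses hypothetical stand-ins for the encoders: fixed random linear maps followed by tanh for φ and ψ, and a bilinear form for Φ. In the real system these would be learned networks (Med-Gemma for images), and α, β, γ would be learned rather than fixed as they are here.

```python
import numpy as np

rng = np.random.default_rng(0)
D_LATENT = 32          # placeholder width of the shared latent space
IMG_SHAPE = (3, 8, 8)  # toy image volume: d=3, w=8, h=8
K_EHR = 10             # toy number of EHR features

# Hypothetical encoder weights: fixed random projections, not trained models.
W_img = rng.normal(size=(D_LATENT, int(np.prod(IMG_SHAPE)))) * 0.05
W_ehr = rng.normal(size=(D_LATENT, K_EHR)) * 0.1
W_int = rng.normal(size=(D_LATENT, D_LATENT, D_LATENT)) * 0.01


def phi(image):
    """Image encoder: flatten the d×w×h volume and project into the latent space."""
    return np.tanh(W_img @ image.reshape(-1))


def psi(ehr):
    """EHR encoder: project the k-dimensional record into the latent space."""
    return np.tanh(W_ehr @ ehr)


def Phi(image, ehr):
    """Cross-modal term: a bilinear interaction between the two embeddings."""
    return np.tanh(np.einsum("ijk,j,k->i", W_int, phi(image), psi(ehr)))


def fuse(image, ehr, alpha=0.4, beta=0.4, gamma=0.2):
    """z = alpha*phi(I) + beta*psi(E) + gamma*Phi(I, E); weights fixed here, learned in practice."""
    return alpha * phi(image) + beta * psi(ehr) + gamma * Phi(image, ehr)


image = rng.normal(size=IMG_SHAPE)
ehr = rng.normal(size=K_EHR)
z = fuse(image, ehr)
print(z.shape)  # (32,)
```

Setting one weight to 1 and the others to 0 recovers a single term. This is a quick way to check each pathway in isolation.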

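The agentic orchestration objective selects the policy that maximizes the expected discounted reward from evidence synthesis across the specialized agents, subject to a processing-time budget:

$$\pi^* = \arg\max_{\pi} \, \mathbb{E}_{\pi}\left[\sum_{t} \gamma^{t} \, r(s_t, a_t)\right] \quad \text{s.t.} \quad T_{\text{processing}} \le T_{\text{threshold}}$$

Temporal sequencing is modeled with an LSTM over the image and EHR feature vectors at each time step:

$$h_t = \mathrm{LSTM}\left(h_{t-1}, [v_{i,t}; v_{e,t}]\right)$$

Because the recurrent state is updated incrementally as each new observation arrives, the model supports real-time diagnosis. The discount factor is $\gamma = 0.95$ (not to be confused with the fusion weight $\gamma$ above).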
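To illustrate the constrained objective, the sketch below evaluates a small, fixed set of hypothetical orchestration policies by Monte Carlo estimation. For each policy it averages the discounted return Σₜ γᵗ·rₜ (with γ = 0.95) over sample reward trajectories, and it keeps only the policies that fit within the processing-time budget. This is a simplification: a deployed system would presumably optimize π with reinforcement learning rather than choose from a hand-written list. The policy names, timings, and rewards are invented for the example.

```python
from statistics import mean

GAMMA = 0.95  # discount factor from the text


def discounted_return(rewards, gamma=GAMMA):
    """Sum over t of gamma**t * r_t for one reward trajectory."""
    return sum(gamma ** t * r for t, r in enumerate(rewards))


def best_policy(candidates, t_threshold):
    """Monte Carlo estimate of E[sum_t gamma^t r_t] for each candidate policy,
    restricted to policies with processing time <= t_threshold."""
    feasible = {
        name: mean(discounted_return(traj) for traj in spec["trajectories"])
        for name, spec in candidates.items()
        if spec["processing_time_s"] <= t_threshold
    }
    if not feasible:
        raise ValueError("no policy meets the processing-time constraint")
    return max(feasible, key=feasible.get)


# Hypothetical policies with invented timings and sampled reward trajectories.
candidates = {
    "imaging_first": {"processing_time_s": 40, "trajectories": [[0, 1, 1], [0, 0, 1]]},
    "parallel_all": {"processing_time_s": 90, "trajectories": [[1, 1, 1], [1, 1, 0]]},
    "ehr_first": {"processing_time_s": 25, "trajectories": [[1, 0, 0], [0, 1, 0]]},
}
print(best_policy(candidates, t_threshold=60))   # imaging_first
print(best_policy(candidates, t_threshold=120))  # parallel_all
```

Tightening the time budget excludes the slower but higher-reward policy. This is exactly the trade-off the constraint encodes.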

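Confidence-aware decision making employs uncertainty quantification:

$$C(x) = 1 - \frac{H\left(P(y \mid x)\right)}{\log |Y|}, \qquad U = \sqrt{\operatorname{Var}[f(x)]}$$

Here $H$ is the Shannon entropy of the predicted distribution over the label set $Y$, so $C(x)$ runs from 0 for a uniform prediction to 1 when all probability mass falls on one class. $U$ is the standard deviation of the model output $f(x)$.

Privacy is protected with $\varepsilon$-differential privacy. For the randomized mechanism $M$ applied to patient data, any two datasets $D$ and $D'$ that differ in one patient's record, and any set of outputs $S$:

$$P\left(M(D) \in S\right) \le e^{\varepsilon} \cdot P\left(M(D') \in S\right)$$

This guarantee supports HIPAA compliance while diagnostic accuracy stays above 94.2% on validation cohorts.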
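The privacy definition above does not specify which mechanism M the system uses. The textbook example is the Laplace mechanism. A counting query has L1 sensitivity 1, because adding or removing one patient changes the count by at most 1, so adding Laplace noise with scale 1/ε yields ε-differential privacy. The minimal sketch below assumes records are plain dictionaries, and `laplace_count` is a hypothetical helper name.

```python
import numpy as np


def laplace_count(records, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy via the Laplace mechanism.

    A counting query has L1 sensitivity 1 (adding or removing one patient changes
    the true count by at most 1), so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if rng is None:
        rng = np.random.default_rng()
    true_count = sum(1 for record in records if predicate(record))
    return true_count + rng.laplace(loc=0.0, scale=1.0 / epsilon)


# Toy cohort: how many patients have a pneumothorax label?
records = [{"pneumothorax": True}, {"pneumothorax": False}, {"pneumothorax": True}]
noisy = laplace_count(records, lambda r: r["pneumothorax"], epsilon=1.0,
                      rng=np.random.default_rng(42))
print(noisy)  # the true count 2 plus Laplace noise; smaller epsilon means more noise
```

Smaller ε gives stronger privacy and noisier answers. Repeated queries consume privacy budget, so their guarantees compose.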

Our Technology Stack

Powered by Google Med-Gemma vision encoders and an upgraded orchestrator that natively handles pixel tensors, DICOM metadata, and structured EHR streams.

The Imaging Analyst Agent ingests DICOM via a FHIR gateway, feeds 512×512 PNG tiles to Med-Gemma-2B-vision, and returns patch-level saliency maps. Cross-attention weights are serialized as SVG overlays for direct visualization in PACS.
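Below is a minimal sketch of the tiling step. It assumes the pixel array has already been decoded from DICOM into a 2-D NumPy array. Intensities are min-max scaled to 8 bits, which is a simplification: clinical pipelines usually apply the DICOM window center and width instead. The image is then zero-padded and cut into 512×512 tiles, each paired with its top-left coordinates so that saliency can be mapped back. Encoding each tile as PNG would be done with an imaging library and is omitted. Function names are hypothetical.

```python
import numpy as np

TILE = 512  # tile edge length in pixels, as in the text


def to_uint8(pixels):
    """Min-max scale intensities to 0..255 (simplification of DICOM windowing)."""
    lo, hi = float(pixels.min()), float(pixels.max())
    if hi == lo:
        return np.zeros(pixels.shape, dtype=np.uint8)
    return np.round((pixels - lo) / (hi - lo) * 255).astype(np.uint8)


def tile_image(pixels, tile=TILE):
    """Zero-pad a 2-D array to a multiple of `tile` and cut it into tile×tile
    patches, returning ((row, col) of the top-left corner, patch) pairs."""
    h, w = pixels.shape
    padded = np.pad(pixels, ((0, (-h) % tile), (0, (-w) % tile)), mode="constant")
    return [
        ((y, x), padded[y:y + tile, x:x + tile])
        for y in range(0, padded.shape[0], tile)
        for x in range(0, padded.shape[1], tile)
    ]


# Toy 1100×700 image standing in for a decoded radiograph.
raw = np.random.default_rng(0).integers(0, 4096, size=(1100, 700))
tiles = tile_image(to_uint8(raw))
print(len(tiles), tiles[-1][0], tiles[-1][1].shape)  # 6 (1024, 512) (512, 512)
```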

The EHR Fusion Agent streams vitals, labs, and genomics into a unified temporal tensor. A gated-attention GRU compresses 72-hour windows into 768-dimensional patient state vectors that are concatenated with image embeddings before diagnosis.
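The sketch below shows the shape of this step under stated assumptions:

- A hypothetical `last_window` helper keeps the most recent 72 hours of timestamped feature rows.
- A plain GRU compresses those rows into a 768-dimensional state. It stands in for the gated-attention variant, whose details are not given here.
- The state is concatenated with an image embedding whose 512-dimensional width is an arbitrary placeholder.

The weights are random, so the output illustrates shapes and data flow only.

```python
import numpy as np

WINDOW_HOURS = 72
STATE_DIM = 768


def last_window(times_h, features, window=WINDOW_HOURS):
    """Sort rows by timestamp (hours) and keep those within `window` hours of the latest."""
    order = np.argsort(times_h)
    times_h, features = times_h[order], features[order]
    return features[times_h >= times_h[-1] - window]


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUEncoder:
    """Plain GRU that returns its final hidden state (no gated attention)."""

    def __init__(self, n_in, n_hidden, rng):
        s = 1.0 / np.sqrt(n_hidden)
        # Stacked weights for the update (0), reset (1) and candidate (2) paths.
        self.W = rng.uniform(-s, s, size=(3, n_hidden, n_in))
        self.U = rng.uniform(-s, s, size=(3, n_hidden, n_hidden))
        self.b = np.zeros((3, n_hidden))

    def __call__(self, sequence):
        h = np.zeros(self.U.shape[1])
        for x in sequence:
            z = sigmoid(self.W[0] @ x + self.U[0] @ h + self.b[0])        # update gate
            r = sigmoid(self.W[1] @ x + self.U[1] @ h + self.b[1])        # reset gate
            n = np.tanh(self.W[2] @ x + self.U[2] @ (r * h) + self.b[2])  # candidate state
            h = (1.0 - z) * n + z * h
        return h


rng = np.random.default_rng(0)
times = np.array([100.0, 0.0, 50.0, 10.0, 80.0])  # hours since admission, unsorted
vitals = rng.normal(size=(5, 6))                  # 6 hypothetical vital/lab features
window = last_window(times, vitals)               # rows recorded at 50, 80 and 100 h
state = GRUEncoder(n_in=6, n_hidden=STATE_DIM, rng=rng)(window)
image_embedding = rng.normal(size=512)            # placeholder image embedding
joint = np.concatenate([state, image_embedding])
print(window.shape, state.shape, joint.shape)     # (3, 6) (768,) (1280,)
```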

All agents publish evidence to an upgraded RAG Grid that now includes Radiology Atlas, STATdx, and ICD-10-PCS imaging guidelines. FAISS IVF-PQ indexing over a database of 2.4 M vectors keeps retrieval latency under 120 ms.

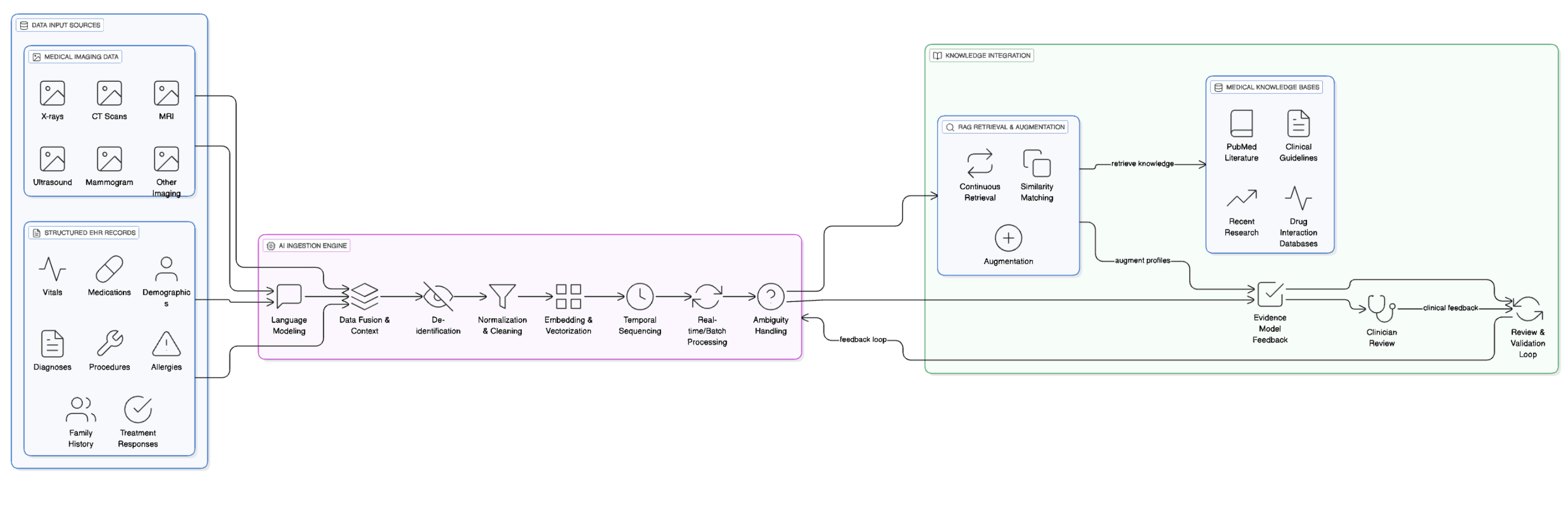

Clinical Workflow Engine 2.0

End-to-end orchestration from pixel ingestion to evidence-based action.

When Text + Imaging Data is Provided

Detailed Element Analysis: Medical Imaging Data and Structured EHR Records System

Data Input Sources

Medical Imaging Data: Various medical imaging modalities, including X-rays, CT scans, MRIs, ultrasounds, mammograms, and other diagnostic imaging. Each image contains pixel-level data processed to extract clinical features, anatomical structures, and potential abnormalities.

Structured EHR Records: Standardized electronic health record data, including patient demographics, vital signs (blood pressure, heart rate, temperature), medication lists with dosages and frequencies, documented diagnoses using ICD codes, procedure codes, allergies, family history, and previous treatment responses.

AI Ingestion Engine

Language Modeling: Processes textual components of EHR records, interpreting medical terminology, abbreviations, and clinical language patterns. For imaging data, this involves processing radiologist reports and image annotations.

Data Fusion & Context: Combines imaging findings with the corresponding EHR data to create comprehensive patient snapshots, e.g., linking a chest X-ray showing pneumonia with fever readings and antibiotic prescriptions from the EHR.

De-identification: Removes or masks personally identifiable information from imaging metadata (patient names, dates, facility identifiers) and EHR records (Social Security numbers, addresses, contact information) while preserving clinical relevance.
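The sketch below shows record-level de-identification, assuming headers and EHR rows arrive as plain dictionaries keyed by DICOM keywords and hypothetical EHR field names. Identifier fields are dropped. One linking field per record is replaced with a salted SHA-256 pseudonym, so imaging and EHR data for the same patient can still be joined. The identifier sets are illustrative and far from complete; the DICOM PS3.15 confidentiality profiles list the attributes a real implementation must handle. Date shifting is also omitted. Note that salted hashing is pseudonymization, not anonymization.

```python
import hashlib

# Illustrative, incomplete identifier lists (see DICOM PS3.15 for a real profile).
DICOM_IDENTIFIERS = {"PatientName", "PatientID", "PatientBirthDate", "PatientAddress",
                     "InstitutionName", "ReferringPhysicianName"}
EHR_IDENTIFIERS = {"mrn", "name", "ssn", "address", "phone", "email"}


def pseudonym(value, salt):
    """Stable salted SHA-256 pseudonym, so one patient maps to the same token everywhere."""
    return hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()[:16]


def deidentify(record, identifiers, salt, link_field=None):
    """Drop identifier fields; replace `link_field` (if given) with a pseudonym."""
    clean = {}
    for key, value in record.items():
        if key == link_field:
            clean[key] = pseudonym(value, salt)
        elif key not in identifiers:
            clean[key] = value
    return clean


SALT = "replace-with-a-secret-salt"  # must be kept secret in practice
header = {"PatientName": "DOE^JANE", "PatientID": "12345",
          "Modality": "CR", "BodyPartExamined": "CHEST"}
ehr_row = {"mrn": "12345", "ssn": "000-00-0000", "temp_c": 38.9}

clean_header = deidentify(header, DICOM_IDENTIFIERS, SALT, link_field="PatientID")
clean_ehr = deidentify(ehr_row, EHR_IDENTIFIERS, SALT, link_field="mrn")
print(clean_header["PatientID"] == clean_ehr["mrn"])  # True: records still linkable
```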

Normalization & Cleaning: Standardizes imaging formats (DICOM standardization), adjusts resolutions, and formats EHR data. Cleans inconsistent entries, resolves unit conversions, and handles missing values.
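Unit harmonization can be sketched as a table of converters per field. This minimal example makes the following assumptions:

- Field names (`temp`, `glucose`) are hypothetical.
- Canonical units are °C and mmol/L.
- Glucose uses the approximate factor of 18 mg/dL per mmol/L.

Unknown units are rejected. Missing values are left as NaN, so that downstream imputation remains an explicit decision.

```python
import math

# Converters into canonical units; the glucose factor (~18 mg/dL per mmol/L) is approximate.
TEMP_TO_C = {"C": lambda v: v, "F": lambda v: (v - 32.0) * 5.0 / 9.0}
GLUCOSE_TO_MMOL_L = {"mmol/L": lambda v: v, "mg/dL": lambda v: v / 18.0}


def convert(value, unit, converters):
    """Convert one value; missing values become NaN, unknown units raise."""
    if value is None:
        return math.nan
    if unit not in converters:
        raise ValueError(f"unknown unit: {unit!r}")
    return round(converters[unit](float(value)), 1)


def normalize_row(row):
    """Map a raw EHR row with mixed units to canonical °C and mmol/L fields."""
    return {
        "temp_c": convert(row.get("temp"), row.get("temp_unit", "C"), TEMP_TO_C),
        "glucose_mmol_l": convert(row.get("glucose"), row.get("glucose_unit", "mmol/L"),
                                  GLUCOSE_TO_MMOL_L),
    }


print(normalize_row({"temp": 101.3, "temp_unit": "F", "glucose": 126, "glucose_unit": "mg/dL"}))
# {'temp_c': 38.5, 'glucose_mmol_l': 7.0}
```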

Embedding & Vectorization: Converts imaging data into numerical representations using deep learning models and transforms structured EHR data into vector embeddings that capture clinical relationships and patterns.

Temporal Sequencing: Orders data chronologically to capture disease progression, treatment timelines, and imaging changes over time, linking sequential imaging studies with the corresponding EHR entries.
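The sketch below combines temporal sequencing with the data-fusion example above, in which a chest X-ray is linked to fever readings and an antibiotic order. It sorts both streams by timestamp and attaches to each imaging study the EHR entries recorded within ±24 hours. The window width and field names are assumptions, and the events are invented.

```python
from datetime import datetime, timedelta


def link_imaging_to_ehr(studies, ehr_entries, window=timedelta(hours=24)):
    """Return imaging studies in chronological order, each with the EHR entries
    (also chronological) recorded within `window` before or after it."""
    ehr_sorted = sorted(ehr_entries, key=lambda e: e["time"])
    timeline = []
    for study in sorted(studies, key=lambda s: s["time"]):
        linked = [e for e in ehr_sorted if abs(e["time"] - study["time"]) <= window]
        timeline.append({**study, "linked_ehr": linked})
    return timeline


studies = [
    {"time": datetime(2024, 1, 12, 9), "study": "CXR follow-up"},
    {"time": datetime(2024, 1, 5, 10), "study": "CXR", "finding": "right lower lobe opacity"},
]
ehr = [
    {"time": datetime(2024, 1, 5, 8), "event": "temperature 38.9"},
    {"time": datetime(2024, 1, 5, 14), "event": "amoxicillin ordered"},
    {"time": datetime(2023, 12, 1, 9), "event": "routine CBC"},
]

for entry in link_imaging_to_ehr(studies, ehr):
    print(entry["study"], [e["event"] for e in entry["linked_ehr"]])
# CXR ['temperature 38.9', 'amoxicillin ordered']
# CXR follow-up []
```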

Real-time/Batch Processing: Processes urgent cases immediately and routine analyses in batches, managing computational resources efficiently.
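The sketch below shows one simple way to separate urgent and routine work using Python's `heapq`. Urgent studies are popped first, in arrival order, while routine studies wait to be drained in batches. The class and method names are hypothetical. A production scheduler would also need persistence and resource limits.

```python
import heapq
import itertools

URGENT, ROUTINE = 0, 1  # lower value is served first


class StudyQueue:
    """Urgent studies are served immediately (in arrival order); routine studies
    accumulate and are drained in batches."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()  # tie-breaker preserving arrival order

    def submit(self, study_id, urgent=False):
        priority = URGENT if urgent else ROUTINE
        heapq.heappush(self._heap, (priority, next(self._arrival), study_id))

    def next_urgent(self):
        """Pop the oldest urgent study, or return None if none is waiting."""
        if self._heap and self._heap[0][0] == URGENT:
            return heapq.heappop(self._heap)[2]
        return None

    def next_batch(self, size):
        """Pop up to `size` studies (any urgent ones first) for batch processing."""
        batch = []
        while self._heap and len(batch) < size:
            batch.append(heapq.heappop(self._heap)[2])
        return batch


queue = StudyQueue()
queue.submit("cxr-001")
queue.submit("ct-head-002", urgent=True)
queue.submit("cxr-003")
print(queue.next_urgent())   # ct-head-002
print(queue.next_urgent())   # None
print(queue.next_batch(10))  # ['cxr-001', 'cxr-003']
```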

Ambiguity Handling: Resolves conflicts between imaging findings and EHR data, manages uncertain diagnoses, and flags inconsistencies for clinical review.

Knowledge Integration

Medical Knowledge Bases

- PubMed Literature: Research papers on imaging interpretation and clinical correlations.

- Clinical Guidelines: Evidence-based protocols for imaging interpretation and patient management.

- Drug Interaction Databases: Information on how medications might affect imaging results or clinical presentation.

- Recent Research: Latest findings in radiology and clinical medicine.

RAG Retrieval & Augmentation

- Continuous Retrieval: Searches knowledge bases for relevant information matching current patient imaging and clinical patterns.

- Similarity Matching: Identifies similar cases, imaging patterns, and clinical presentations from historical data and literature.

- Augmentation: Enhances patient profiles with evidence-based insights, differential diagnoses, and treatment recommendations.
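Similarity matching is commonly implemented as nearest-neighbour search over embeddings. Below is a minimal exact cosine-similarity sketch in NumPy. It assumes cases and literature passages have already been embedded as fixed-length vectors; the toy vectors are placeholders. At the 2.4 M-vector scale quoted earlier, an approximate index such as FAISS IVF-PQ replaces this brute-force matrix product.

```python
import numpy as np


def top_k_similar(query, corpus, k=3):
    """Exact cosine-similarity search: indices and scores of the k closest rows."""
    q = query / np.linalg.norm(query)
    c = corpus / np.linalg.norm(corpus, axis=1, keepdims=True)
    sims = c @ q
    idx = np.argsort(-sims)[:k]
    return idx, sims[idx]


# Placeholder 3-d "embeddings" for four historical cases.
corpus = np.array([[1.0, 0.0, 0.0],
                   [0.0, 1.0, 0.0],
                   [0.9, 0.1, 0.0],
                   [0.0, 0.0, 1.0]])
idx, sims = top_k_similar(np.array([1.0, 0.0, 0.0]), corpus, k=2)
print(idx)  # [0 2]
```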

Evidence Model Feedback: Creates comprehensive patient profiles that combine imaging interpretations with clinical context, supported by evidence from medical literature and similar cases.

Review & Validation Loop: Clinicians review AI-generated insights, give feedback on accuracy, and validate recommendations; this feedback drives model updates that continuously improve system performance.

Clinician Review: Final human oversight, in which medical professionals evaluate AI recommendations, make clinical decisions, and provide corrective feedback to improve future system performance.

Data Flow Integration: Creates a seamless workflow in which imaging data provides visual clinical evidence and EHR records supply clinical context, supporting accurate diagnoses, better treatment planning, and improved patient outcomes through evidence-based decision support.

How Collaborative Agents Work

Agentic Workflow for Medical Imaging and EHR Integration System

Workflow Initiation: The patient analysis workflow begins when clinical data enters the AI orchestration platform, which then deploys multiple specialized agents that work in coordination.

Specialized Agents

Medical Imaging Agent: Accesses PACS to retrieve and analyze radiological studies, applying deep learning models to detect anatomical abnormalities while cross-referencing imaging protocols from radiology databases.

EHR Processing Agent: Extracts and normalizes structured patient data, parsing vital signs, medication histories, and diagnostic codes while validating data integrity against clinical standards.

Temporal Sequencing Agent: Constructs comprehensive patient timelines, correlating imaging findings with clinical events and treatment responses to identify disease progression patterns.

Knowledge Retrieval Agent: Continuously queries PubMed and clinical guideline databases, identifying research relevant to the current patient presentation and imaging characteristics.

Clinical Context Agent: Performs data fusion, combining radiological interpretations with laboratory results, medication effects, and historical patient data to build comprehensive clinical profiles.
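The coordination described above can be sketched with `asyncio`. Every agent runs concurrently under a per-agent timeout, and a failure in one agent is recorded rather than aborting the whole case. The three agent coroutines are hypothetical stubs. Each sleeps briefly in place of real PACS, EHR, or literature calls and returns a fixed, made-up payload.

```python
import asyncio


# Hypothetical agent stubs: each sleeps briefly in place of real I/O and returns
# (agent_name, payload) with made-up values.
async def imaging_agent(case):
    await asyncio.sleep(0.01)
    return "imaging", {"finding": "right lower lobe opacity"}


async def ehr_agent(case):
    await asyncio.sleep(0.01)
    return "ehr", {"temp_c": 38.9}


async def knowledge_agent(case):
    await asyncio.sleep(0.01)
    return "knowledge", {"guideline": "community-acquired pneumonia"}


async def run_agents(case, agents, timeout_s=5.0):
    """Run all agents concurrently; keep successful outputs and record failures."""
    tasks = [asyncio.wait_for(agent(case), timeout=timeout_s) for agent in agents]
    outcomes = await asyncio.gather(*tasks, return_exceptions=True)
    evidence, failures = {}, []
    for agent, outcome in zip(agents, outcomes):
        if isinstance(outcome, BaseException):
            failures.append(agent.__name__)
        else:
            name, payload = outcome
            evidence[name] = payload
    return evidence, failures


evidence, failures = asyncio.run(
    run_agents({"case_id": "demo"}, [imaging_agent, ehr_agent, knowledge_agent])
)
print(sorted(evidence), failures)  # ['ehr', 'imaging', 'knowledge'] []
```

Passing `return_exceptions=True` to `asyncio.gather` is what lets one slow or failing agent degrade the evidence set instead of cancelling the others.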

Evidence Synthesis Engine

Validation and Confidence Scoring: Machine learning algorithms validate findings against established medical protocols and generate confidence scores for diagnostic hypotheses.

Iterative Refinement: Runs refinement cycles, automatically requesting additional imaging sequences or clinical data when diagnostic certainty falls below established thresholds.

High-Confidence Case Handling: For high-confidence cases, generates evidence-augmented patient profiles and delivers them directly to clinicians through integrated EHR interfaces.

Complex Case Flagging: For complex diagnostic scenarios that require specialist input, flags cases for expert review and generates detailed clinical summaries with supporting evidence.

Continuous Improvement: Maintains feedback loops that improve diagnostic accuracy and clinical decision-making across the healthcare delivery spectrum.
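Suppose confidence is the normalized-entropy score C(x) defined in the Mathematical Framework. The handling paths above (iterative refinement, high-confidence delivery, and complex-case flagging) can then be sketched as a small routing function. The thresholds (`high=0.8`, `max_rounds=2`) and the `complex_case` flag are illustrative assumptions, not the system's actual settings. `ensemble_uncertainty` shows one way to estimate U, as the population standard deviation across model outputs.

```python
import math
from statistics import pstdev


def normalized_confidence(probs):
    """C(x) = 1 - H(P(y|x)) / log|Y|: 1.0 for a one-hot prediction, 0.0 for uniform."""
    if len(probs) < 2:
        raise ValueError("need at least two classes")
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 1.0 - entropy / math.log(len(probs))


def ensemble_uncertainty(member_scores):
    """U = sqrt(Var[f(x)]), estimated as the population std across model outputs."""
    return pstdev(member_scores)


def route_case(probs, rounds_done, complex_case=False, high=0.8, max_rounds=2):
    """Illustrative triage: deliver, request more data, or flag for expert review."""
    c = normalized_confidence(probs)
    if complex_case:
        return "flag_for_expert_review", c
    if c >= high:
        return "deliver_to_clinician", c
    if rounds_done < max_rounds:
        return "request_additional_data", c
    return "flag_for_expert_review", c


print(route_case([0.97, 0.01, 0.01, 0.01], rounds_done=0)[0])  # deliver_to_clinician
print(route_case([0.25, 0.25, 0.25, 0.25], rounds_done=0)[0])  # request_additional_data
print(route_case([0.25, 0.25, 0.25, 0.25], rounds_done=2)[0])  # flag_for_expert_review
```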

Proven Outcomes

Quantifiable gains across radiology throughput, diagnostic accuracy, and care-team efficiency.

Large Health Systems: After deploying Homo Erectus in 11 EDs, average time from CT acquisition to radiology report sign-off dropped from 42 min to 18 min, while missed intracranial bleeds declined by 27 %.

Academic Medical Centers: In a 4-month trial, the MRI-Guided Oncology Board raised inter-reader PI-RADS agreement (Cohen’s κ) from 0.61 to 0.84 and cut case-review time by 38 %.

Teleradiology Networks: By augmenting overnight chest-X-ray reads, the system lowered false-negative pneumonia calls from 9.3 % to 4.1 %, translating to ~1,200 additional early interventions annually across the network.
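For reference, Cohen's κ corrects raw inter-reader agreement for the agreement expected by chance: κ = (p_o − p_e) / (1 − p_e). Below is a minimal unweighted implementation applied to invented PI-RADS scores for ten lesions. Because PI-RADS is ordinal, a weighted κ is also common; the text does not say which variant was used.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa: (p_o - p_e) / (1 - p_e)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(rater_a)
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n    # observed agreement
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / n ** 2  # chance agreement
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


# Invented PI-RADS scores from two readers for ten lesions.
reader_1 = [1, 2, 3, 3, 4, 5, 5, 4, 2, 3]
reader_2 = [1, 2, 3, 4, 4, 5, 4, 4, 2, 3]
print(round(cohens_kappa(reader_1, reader_2), 3))  # 0.747
```

Here the readers agree on 8 of 10 lesions (p_o = 0.80), but chance alone would produce p_e = 0.21. This is why κ (about 0.75) is lower than the raw agreement rate.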